The British Psychologist magazine asks every psychologist it interviews for a book recommendation. The list below is an edited version of its compilation. I'd save the James for last: the percentage of psychologists who have read it is probably even lower than the percentage of historians who have read Gibbon's Decline and Fall of the Roman Empire. But I have either enjoyed the books on the list below or plan to read them soon.

The entire list

William James’s

The Principles of Psychology (1890). "He shares his thinking and questioning with his reader so that one can enter his mind, and live with him as a friend. He combines being a superb communicator with insights of philosophy and science really worth communicating. I was struck by his breadth of mind, respecting the arts from the past as well as technologies for creating future science," said

Richard Gregory, Jun 08.

"The

Principles of Psychology by William James, of course. Not only is it brilliant and prescient, but the quality of the writing is humbling," said

Daniel Gilbert, Jul 08.

"Having referred to it for many years in the context of social facilitation, I was intrigued when I finally read

Norman Triplett’s original 1898 paper and realised how psychologists have misreported his methods, results and conclusions regarding the effects of coactors on performance," said

Sandy Wolfson, Aug 08.

Mistakes Were Made (But Not by Me) by Carol Tavris and Elliot Aronson. "You’ll get to understand why hypocrites never see their own hypocrisy, why couples so often misremember their shared history, why many people persist in courses of action that lead straight into quicksand. It’s lucid and witty, and a delightful read," said

Elizabeth Loftus, Oct 08.

"Impossible question. William James’s

Principles of Psychology for writing style, prescience and insight; Elliot Aronson’s

The Social Animal for its passionate, personal prose and introduction to the major concerns of social psychology; and Judith Rich Harris’s

The Nurture Assumption for its brilliant, creative reassessment of the basic but incorrect assumptions of developmental psychology. Her book is a model of how psychologists need to let data supersede ideology and vested intellectual convictions, and change direction when the evidence demands," said

Carol Tavris, Mar 09.

"Julian Jaynes’s 1976 cult classic

The Origin of Consciousness in the Breakdown of the Bicameral Mind, which makes the startling claim that subjective consciousness (in the sense of internalised mind space) arose a mere 3000 years ago through the development of metaphorical language, a process itself driven by increasing social and cultural complexity. Some of the historical and classical scholarship may be dubious and the neuropsychology is sketchy, but the book is a wonderful imaginative achievement, a pioneering attempt to fuse ancient history, psychology and neuroscience," said

Paul Broks, Apr 09.

Attachment by John Bowlby. "We are much more likely to read critiques of Bowlby’s theories than the original work. Although his views had a negative impact on the lives of women after the Second World War by putting pressure on mothers to stay at home with their children, he writes beautifully and compellingly about the interactions between infants and their mother. This aspect of his work has been lost to those not closely involved with the study of attachment relationships," said

Susan Golombok, Aug 09.

Semrad: The Heart of a Therapist. "This book is a collection of quotes and anecdotes from Elvin Semrad, a psychiatrist who practised in the United States in the second half of the 20th century. He consistently emphasised that the first and most important task of the trainee practitioner is to learn to sit with the patient, listen to and hear them, and help to stand the pain they could not bear alone. Semrad wouldn’t have agreed with my choice here as he believed ‘the patient is the only textbook we need’," said

David Lavallee, Jun 10.

The Republic, Plato. "It covers so many aspects of social organisation, reminding us that the fundamental questions have been addressed, just as we go on addressing them," said

Margaret McAllister, Feb 11.

"B.F. Skinner’s

The operational analysis of psychological terms (Psychological Review 52, 270–277, 1945) is rarely read and even less often understood. Contrary to some misrepresentations of his position, Skinner never doubted that we can describe internal states such as thoughts or emotions, but he wondered how we are able to do this. His answer was surprising, relevant to the practice of psychotherapy, and a challenge to all those who (like some unsophisticated therapists) assume that we can know our own feelings by a simple process of self-inspection," said

Richard Bentall, Apr 11.

"Michael Rutter’s

Genes and Behaviour. It eloquently and effortlessly gives a comprehensive overview of behaviour genetics."

Essi Viding, September 2011.

"I think

The Selfish Gene by Richard Dawkins was probably the most influential book I read as a student that really shaped my thinking as a psychologist and a human."

Bruce Hood, Jan 12

"

The Man with a Shattered World by A.R. Luria. It’s about a Russian soldier who sustained a severe brain injury in World War II. The effort, hope and commitment shown by this man had a big impact on me."

Barbara Wilson, February 12

"The two volumes of William James’s

The Principles of Psychology."

Martin Conway, March 2012.

"The maxims of the

Duc de la Rochefoucauld. Perhaps it’s best to read whatever excites your curiosity at the time. But it might well bore you later on."

Richard Hallam June 2012

"

Advice for a Young Investigator by Ramon y Cajal."

Hugo Spiers September 2012

"Jonathan Haidt’s

The Righteous Mind shows how you can tackle really important themes in a way that is both elegant and precise."

Guy Claxton October 2012

"Michel Foucault,

Madness and Civilisation: A History of Insanity in the Age of Reason (1967, Tavistock)." From

Jane Ussher December 2012

"

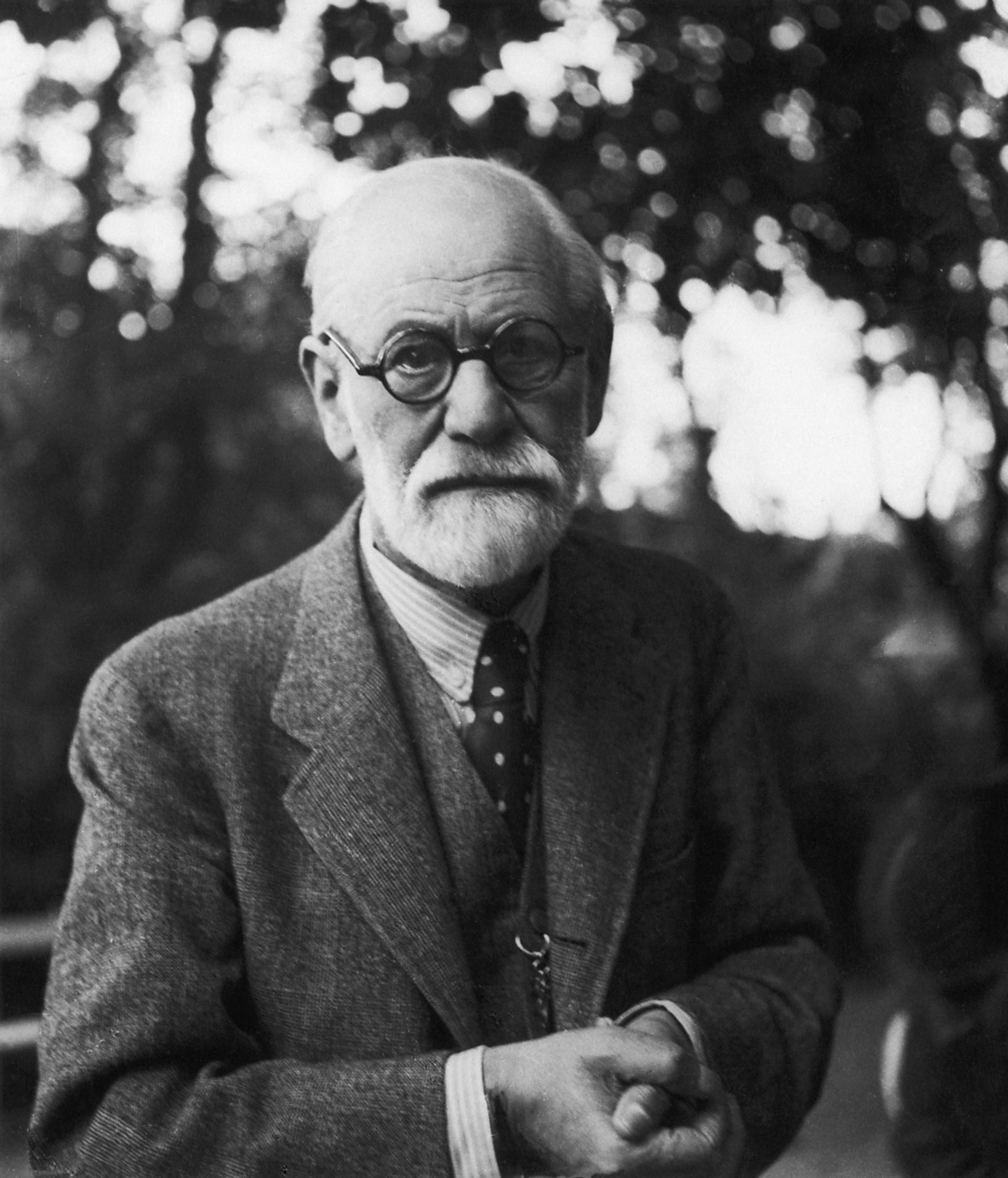

The Interpretation of Dreams by Freud. It was the first psychology book I read, having borrowed it from the library at the age of 15. By the end of it I was convinced dreams were the ‘royal road to the unconscious’ and that psychology was for me."

Dame Glynis Breakwell January 2013

"William James’

Principles of Psychology because it shows how good psychologists can be outside a narrow box of thinking."

Shivani Sharma March 2013

"Paul Johnson’s book

The Intellectuals (1998) in which he looks at the difference between what the great thinkers – Marx, Rousseau, Ibsen, Russell and many more – said and what they actually did. Insightful, inspiring, an exemplar of good historical research and profound."

Stephen Murgatroyd June 2013

"

Man’s Search for Meaning. Viktor E. Frankl. A book that might not offer the answer – but it certainly offers an answer to the vexed question of how to live."

Frank Tallis September 2013

"

Thinking, Fast and Slow, a dual processing account of decision making from Nobel Prize laureate Daniel Kahneman. Accessible, a treasure trove of knowledge, and a real fun read."

Shira Elqayam March 2014

"An essay written in 1946 by George Orwell called ‘Politics and the English language’. Like politics, psychology can sometimes fall victim to its own jargon and conceptual confusions. This essay helps you to write well."

Valerie Curran September 2014

"

The Superstition of the Pigeon by B.F. Skinner: a classic told delightfully."

Aleks Krotoski January 2015

"

Freud’s Civilisation and Its Discontents. I think this is one of the seminal books in applied psychology, although it is classified as political philosophy. I first read it as a sixth-former in Ceylon. Coming from a Buddhist background my world-view was not so different from Freud’s (‘eros’ and ‘thanatos’). Like Freud, I became an atheist and freethinker."

Migel Jayasinghe, February 2015

"

One Flew Over the Cuckoo’s Nest, because it reminds us that the power of the ‘expert’ is easy to abuse."

Jo Silvester, October 2015