From the New Yorker:

Imagine that you are the captain of a pirate ship. You’ve captured some booty, and you need to divide it among your crew. But first the crew will vote on your plan. If you have the support of fewer than half of them, you will die. How do you propose to divide the gold, so that you still have some for yourself—but live to tell the tale?

There is a correct answer: divide it among the top fifty-one per cent of the crew. If you knew that, you’ve passed what used to be one of Google’s infamous, mind-scrambling job-interview questions, which would have placed you one step closer to a career at the technology giant. (Google reportedly banned the practice a couple of years ago.) In a surprising June 19th interview with the New York Times, Laszlo Bock, Google’s senior V.P. of “people operations,” explained why: the company discovered these brainteasers are “a complete waste of time,” and “don’t predict anything” when it comes to job success. Google shouldn’t be shocked. A psychologist would have known at the outset that tests of this nature hardly ever work, and that there are much better predictors of who will get hired and how they will perform.

Researchers have always tried to use psychology for predictive ends: Can what we already know about a person tell us how she will behave in a given situation? The results of these endeavors have been mixed. While there is some evidence for links between certain personality traits and later outcomes, the correlations tend to be limited, and the predictions that can be made are broad at best. For instance, we can tell when a given person will generally succeed at academic pursuits, but not if she’ll excel in a particular seminar on ancient hieroglyphics.

Wait a minute -- first of all, those Google brain teasers are not "personality tests" but cognitive reasoning tests. Second, there is plenty of evidence (e.g., Schmidt & Hunter, 1998) demonstrating the strong predictive validity of intelligence tests and personality measures, especially when they are used in combination. A test of General Mental Ability (GMA) plus a test of Conscientiousness predicts overall job performance across a wide range of jobs with a validity coefficient of 0.60. That's not quite as good as a GMA test plus a structured interview (i.e., asking all the candidates the same questions and then rating their responses), which reaches 0.63, but it beats a GMA test plus an unstructured interview (0.55). By the way, an unstructured interview alone, which is how most hiring is done, predicts overall job performance at only 0.38 (which is still better than hiring at random).

The major problem with most attempts to predict a specific outcome, such as interviews, is decontextualization: the attempt takes place in a generalized environment, as opposed to the context in which a behavior or trait naturally occurs. Google’s brainteasers measure how good people are at quickly coming up with a clever, plausible-seeming solution to an abstract problem under pressure.

But employees don’t experience this particular type of pressure on the job. What the interviewee faces, instead, is the objective of a stressful, artificial interview setting: to make an impression that speaks to her qualifications in a limited time, within the narrow parameters set by the interviewer. What’s more, the candidate is asked to handle an abstracted “gotcha” situation, where thinking quickly is often more important than thinking well. Instead of determining how someone will perform on relevant tasks, the interviewer measures how the candidate will handle a brainteaser during an interview, and not much more.

Actually, the problem with Google's hiring procedure is called restriction of range. When everyone you are interviewing is super-smart, differences in intelligence among them are not going to predict job performance. Think about it -- everyone you hire is super-smart, yet some of them will perform better than others. Even your relative failures are super-smart. So does that mean intelligence doesn't predict success at Google? Nonsense. If they wanted to test that, they could try hiring some people who couldn't crack 1200 on the SAT and see how well they do.

So those brain teasers didn't predict job performance at Google because everyone being interviewed was already super-smart. Some of them got the puzzles right (maybe because they think better on their feet, or maybe because they recognized the format of the puzzle) and some of them didn't, and that split had little to do with how well they later performed. Google can afford to dump the brainteasers from the interview because it is already screening for high cognitive ability (B.S. degree in Electrical Engineering from Stanford? Come on in for an interview!).

College GPAs didn't predict performance either, but only because there's not much difference between a guy who earned a 3.7 GPA in Electrical Engineering at Stanford and a guy who earned a 3.3. Again, this is a problem called restriction of range, not a problem with the selection tests themselves.
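To see how restriction of range can make a genuinely useful predictor look nearly worthless, here is a small Python simulation. It is a sketch under made-up assumptions, not data from Google or from Schmidt & Hunter: ability and performance are normally distributed, the true correlation across the whole applicant pool is set to 0.6, and only the top 2% of applicants by ability get hired. The function names `pearson` and `simulate` are hypothetical, chosen for illustration.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def simulate(n=100_000, true_r=0.6, top_fraction=0.02, seed=1):
    """Return (r in the full applicant pool, r among the hired top scorers).

    Assumed model (illustrative only): performance = true_r * ability + noise,
    with ability and performance both standard normal.
    """
    rng = random.Random(seed)
    noise_sd = math.sqrt(1 - true_r ** 2)  # keeps performance at unit variance
    ability = [rng.gauss(0, 1) for _ in range(n)]
    performance = [true_r * a + rng.gauss(0, noise_sd) for a in ability]

    # Hire only the applicants with the highest ability scores.
    pairs = sorted(zip(ability, performance), reverse=True)
    hired = pairs[: int(n * top_fraction)]
    hired_ability = [a for a, _ in hired]
    hired_perf = [p for _, p in hired]

    return pearson(ability, performance), pearson(hired_ability, hired_perf)


full_r, restricted_r = simulate()
print(f"all applicants: r = {full_r:.2f}")
print(f"hired (top 2%): r = {restricted_r:.2f}")
```

Running it should show a correlation close to the assumed 0.6 across all applicants and a much smaller one among the hires, even though the underlying relationship between ability and performance is identical in both groups. The predictor didn't stop working; the hiring filter simply removed most of the variation it could explain.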

Interviews in general pose a particular challenge when it comes to predictive validity—that is, the ability to determine someone’s future performance based on limited data. Not only are they relatively brief but also, over the past twenty years, psychologists have repeatedly found that few of a candidate’s responses matter. What is significant is the personal impression that the interviewer forms within the first minute (and sometimes less) of meeting the prospective hire. In one study, students were recorded as they took part in mock on-campus recruiting interviews that lasted from eight to thirty minutes.

The interviewers evaluated them based on eleven factors, such as over-all employability, professional competency, and interpersonal skills. The experimenters then showed the first twenty or so seconds of each interview to untrained observers—the initial meet-and-greet, starting with the interviewee’s knock on the door and ending ten seconds after he was seated, before any questions—and asked them to rate the candidates on the same dimensions. What the researchers found was a high correlation between judgments made by the untrained eye in a matter of seconds and those made by trained interviewers after going through the whole process. On nine of the eleven factors, there was a resounding agreement between the two groups.

This phenomenon is broadly known as “thin-slice” judgment. As early as 1937, Gordon Allport, a pioneer of personality psychology, argued that we constantly form sweeping opinions of others based on incredibly limited information and exposure. Since then, multiple studies have shown the truth of that observation: first impressions are paramount. Once formed, they reliably color the rest of our impression formation. The exact same interview response given by two different candidates, one of whom the interviewer preferred, would be rated differently.

So is the author suggesting that we go on first impressions instead of interviewing? Ambady's research is really cool (even though most people credit it to Malcolm Gladwell), but I'm not sure it applies to selection methods. On the other hand, if a candidate meets with several members of your team (including the administrative assistants!) and somebody doesn't like him or her, for whatever reason or even for no reason, you will never regret passing on that person.

Bottom line: Don't trust your initial positive impressions (even psychopaths are charismatic!) but NEVER ignore a twinge of negative reaction that you just can't put into words. You, or someone else in the organization, sensed that there was something "off" about the candidate.

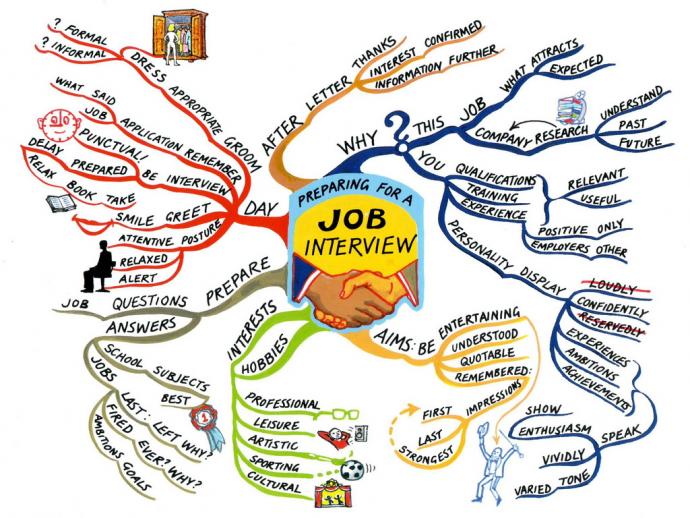

Given the failure of typical interviews to predict job performance consistently, what should companies do instead? Two things have been shown to make the interview process more successful. One is using a highly standardized interview process—for instance, asking each candidate the same questions in the same order. This produces a more objective measure of how each candidate fares, and it can reduce the influence of thin-slice judgment, which can alter the way each interview is conducted.

The other solution is to focus on relevant behavioral measures, both in the past and in the future. The ubiquitous interview question “Describe a situation where you did well on X or failed on Y” is an example of a past behavioral measure; asking a programmer to describe how she would solve a particular programming task would be a future measure. Google and many other tech companies may also ask some candidates to write code on the spot, a task that solves the problem of decontextualization by closely approximating what they would do on the job.

To Google’s credit, the company admitted its failure, and moved its interviews in a different direction. Finding the one right candidate in a group is hard, and companies don’t have much time to figure out exactly which questions can help them tell similar-seeming candidates apart. Or, to quote from another of the banned Google questions, “You have eight balls of the same size. Seven of them weigh the same, and one of them weighs slightly more. How can you find the ball that is heavier by using a balance and only two weighings?”

One thing that Google doesn't have to deal with as much as other organizations is selling the candidate on the job. The selection process has two goals -- determining which candidate is the best "fit" for the open position, and getting that candidate to want the job. A good reason to jettison the brainteaser questions would be that they annoy the job candidates you want to seduce. And, again, brainteasers are redundant because you have already screened for high cognitive ability with the c.v. (college and major).

A work sample might be a better applicant test for Google. Send the candidates you like home with an assignment to code something, solve a business-related problem, prepare a report, etc. Hire the people who turn in the best work.
